E2E tests as a topic always attract extraordinary attention. So it was no surprise that the latest episode of the Quality Bits podcast with Bas Dijkstra drew twice as many reactions and comments as the previous one with Alan Page on Modern Testing (even though I would recommend the latter more to the specialists I work with), and more than most other episodes. Oh well, E2E is magnetic and everywhere, so availability bias is to blame. Nonetheless, I would like to add my two cents, inspired by some ideas shared during that episode (the quotes below are not exact; they convey the general ideas). So, let’s break down some advice that I find dangerous (because it can easily be misinterpreted) in the context of a typical software engineering project.

- “Write more unit tests as they are easier to write.” This statement is mostly correct, so “Where is the danger?” you may ask. My answer: it is in the word “easier”. I see a lot of testing tools (low-code, no-code, Cypress, etc.) playing this card in their advertising, but wait, easy and fast is not always the right thing. Moreover, what if I told you that tests are not the goal at all? The real goal is to build confidence in the way we build software, and automated tests just happen to be widely seen as the best way to get there when teams try to move fast without breaking things (I know Meta famously put it the other way around). So, if I asked you to make some decisions for our team, and you cared about not breaking anything, you would probably find yourself needing more information to clarify things and understand them better. This is why I am not surprised that most junior-ish test automation specialists begin by writing tests at the UI level, even though such tests are “harder” to write and maintain, especially when one is learning programming at the same time. I can see how watching the system pass a single UI test scenario (i.e. a full user journey through a single workflow) can give that junior-ish specialist more (or a different kind of) confidence than seeing 60 “atomic” component/contract tests pass at the unit level. As the specialist matures, I would definitely expect them to learn more about the system’s infrastructure, architecture, and APIs, and to contribute to API and unit tests. Not because those are easier or faster, but because the specialist can now clearly see why it is more efficient (and, in the present context, necessary) to distribute the tests across levels without losing confidence.

To sum up:

- Easier and faster are not always the right thing.

- Tests are not the goal: the main goal is to build confidence in the way the team works (and in the product itself).

- “E2E tests are cherry on the top, so write them at the end.” This one contradicts modern software development practices and reminds me of Waterfall (or Linkin Park?). When we treat requirements as hypotheses/assumptions and seek end-user/client validation, it is often important to deliver a feature MVP/prototype as soon as possible (to get feedback, adjust, and iterate). To collect early feedback, one may decide not to care much about the overall look and feel yet, but the feature should at least be functional and accurate. (It depends, but the main idea is to focus on some quality attributes first and postpone polishing others until the hypothesis succeeds and the feedback is positive.) Most of the time, this means the E2E test should already be in place while we are experimenting with the feature and collecting data or feedback, because life typically does not stop for such “experiments”: other team members will still be adding their changes to the same codebase in parallel.

So, maybe that “cherry on the top” idea was not about adding E2E tests at the end, but about proportions? I have previously heard the “cherry on the top” metaphor applied to visual tests, since in theory (and in practice!) they are even more expensive and brittle than E2E tests, so the visual-test cherry was placed on top of a three-layer unit/integration/E2E test cake (for proportions).

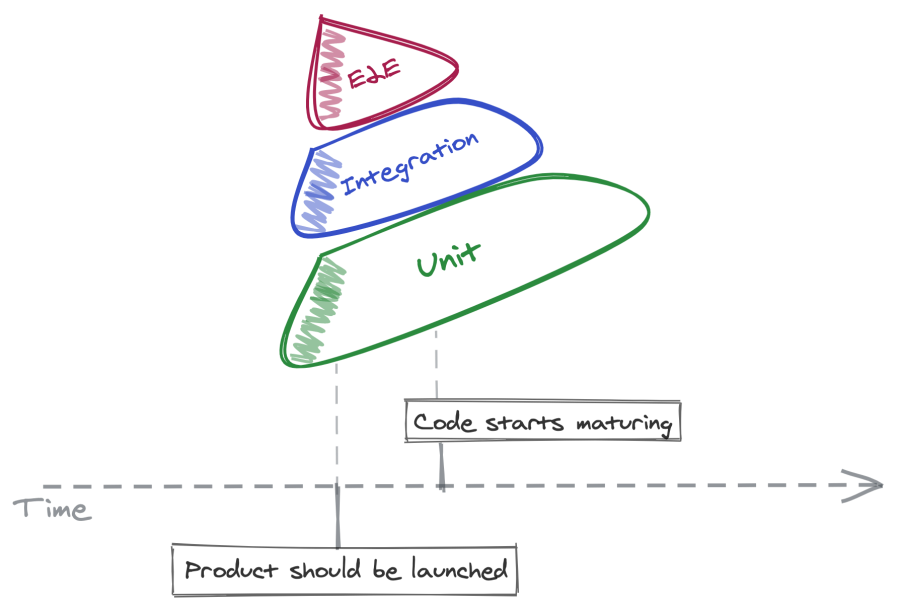

Speaking of cakes (Bas and Lina talked about them too), I really like “The Testing Pavlova” concept (from “Google, You Might Be Wrong Now”), especially how it explains the dynamics of unit/integration/E2E distribution over time. It makes perfect sense: efforts across unit, integration, and E2E tests go hand in hand, and the distribution takes a pyramid-ish shape only as the code matures:

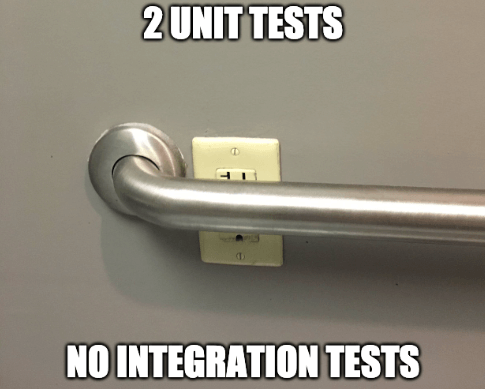

- “E2E tests can be broken down into lower-level tests.” This refers to the original article, in which Bas explicitly clarifies that it does not mean eliminating E2E tests completely. However, the missing piece there is why testing at various levels is needed in the first place. If you only have and maintain unit and integration/contract tests, there will always be a risk that a change breaking the flow at the E2E level goes unnoticed, simply because no test exists at that level to catch it.

The internet is full of memes about such situations, and I assume you know better ones than I do. To the simple joy of testers, this happens from time to time even to the best teams, and it is no surprise: in an ecosystem of frequent changes and many contributors, a change to an individual unit or component sometimes slips through and breaks the bigger picture. How confident are you that your unit and contract tests catch all such things in the environment you work in?
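To make that risk concrete, here is a minimal TypeScript sketch in which every name is hypothetical: a back-end handler whose response field was renamed, and a front-end function that still reads the old field. Each unit test passes against its own assumptions; only a check that wires the real pieces together, the way an E2E test would, exposes the break. It is deliberately simplified, with JSON serialization standing in for the network.

```typescript
// Hypothetical back-end unit: a developer renamed `fullName` to `name`.
interface UserResponse {
  id: number;
  name: string;
}

function getUserHandler(id: number): UserResponse {
  return { id, name: "Ada Lovelace" };
}

// Hypothetical front-end unit: still reads the old `fullName` field.
// The payload is typed loosely (as it often is after JSON.parse),
// so the compiler cannot catch the mismatch.
function renderGreeting(payload: any): string {
  return `Hello, ${payload.fullName}!`;
}

// Back-end unit test: checks the handler in isolation.
function backendUnitTestPasses(): boolean {
  const res = getUserHandler(1);
  return res.id === 1 && res.name === "Ada Lovelace";
}

// Front-end unit test: uses a hand-written stub that still has the old shape.
function frontendUnitTestPasses(): boolean {
  const stub = { id: 1, fullName: "Ada Lovelace" };
  return renderGreeting(stub) === "Hello, Ada Lovelace!";
}

// E2E-style check: feeds the real handler output into the real UI function,
// serialising through JSON as the network would.
function endToEndTestPasses(): boolean {
  const wire = JSON.stringify(getUserHandler(1));
  return renderGreeting(JSON.parse(wire)) === "Hello, Ada Lovelace!";
}

console.log("backend unit:", backendUnitTestPasses());   // true
console.log("frontend unit:", frontendUnitTestPasses()); // true
console.log("end-to-end:", endToEndTestPasses());        // false ("Hello, undefined!")
```

Both unit suites stay green; the composed flow is the only place the rename shows up.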

- “Typical web apps have many interfaces and you can test most scenarios via API.” Yes, this is true. The danger with such statements is that they can be read as implying that testing scenarios at the API level is enough. However, end users will most likely not use the API directly: in our typical project context, we deliver a JavaScript application that end users run in their browsers. Therefore, we will have front-end and back-end applications as separate web services with separate lives, separate CI/CD pipelines, and sometimes even separate teams developing them.

For the sake of simplicity, let’s say this web application is composed of one front-end service (a JS app) and one back-end service (an API gateway connecting to some database). To achieve CI/CD and be able to release them separately, they should be completely decoupled and independent of each other, connecting only via exposed interfaces (contracts). To some extent, we can see the front-end and back-end as two separate products: the back-end application should be fully functional and usable via its API endpoints, and the front-end application should know how to wrap the back-end API endpoints to provide end users with an intuitive UX in their browsers.
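One way to make such a contract explicit is a consumer-driven contract check. The sketch below is hand-rolled and hypothetical (it is not the API of Pact or any other contract-testing tool): the front-end declares which fields it reads from an assumed `GET /users/:id` endpoint, and the back-end pipeline verifies its responses against that declaration, so a renamed or retyped field fails the back-end build instead of surfacing in production.

```typescript
// Field types the consumer can require (kept small for the sketch).
type FieldType = "string" | "number" | "boolean";

interface Contract {
  endpoint: string;
  requiredFields: Record<string, FieldType>;
}

// Hypothetical contract published by the front-end (consumer).
const userContract: Contract = {
  endpoint: "GET /users/:id",
  requiredFields: { id: "number", name: "string" },
};

// Provider-side verification, run in the back-end pipeline against a real
// (or realistic) response. Returns a list of violations; empty means compatible.
function verifyContract(contract: Contract, response: Record<string, unknown>): string[] {
  const violations: string[] = [];
  for (const [field, expected] of Object.entries(contract.requiredFields)) {
    if (!(field in response)) {
      violations.push(`${contract.endpoint}: missing field "${field}"`);
    } else if (typeof response[field] !== expected) {
      violations.push(
        `${contract.endpoint}: field "${field}" is ${typeof response[field]}, expected ${expected}`,
      );
    }
  }
  return violations;
}

// Compatible: extra fields such as `email` are allowed.
console.log(verifyContract(userContract, { id: 1, name: "Ada", email: "ada@example.com" })); // []
// Incompatible: "id" has the wrong type and "name" is missing (two violations).
console.log(verifyContract(userContract, { id: "1", fullName: "Ada" }));
```

Extra fields are deliberately tolerated, so the back-end can add data without breaking the consumer; only removing or retyping a field the front-end actually uses counts as a violation.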

To achieve CI/CD, the back-end pipeline should contain all the automated tests needed to ensure that the back-end service being delivered will not break anything. Therefore, it will most likely contain some API tests at the scenario level (chains of API requests examining full flows), some more API tests at the atomic level (an endpoint as a unit), and some more integration, contract, and unit tests at the code (method/function) level.
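To illustrate the difference between the atomic and scenario levels, here is a minimal sketch against a hypothetical in-memory user API (`FakeUserApi` and its `/users` routes are assumptions standing in for real HTTP calls, so the sketch runs without a server). The atomic test treats one endpoint as a unit; the scenario test chains create, read, and delete requests, feeding each step’s output into the next.

```typescript
interface ApiResponse {
  status: number;
  body?: unknown;
}

// Hypothetical in-memory stand-in for the back-end service.
class FakeUserApi {
  private users = new Map<string, { id: string; name: string }>();
  private nextId = 1;

  handle(method: string, path: string, body?: { name: string }): ApiResponse {
    const match = path.match(/^\/users(?:\/([^\/]+))?$/);
    if (!match) return { status: 404 };
    const id: string | undefined = match[1];

    if (method === "POST" && id === undefined && body) {
      const user = { id: String(this.nextId++), name: body.name };
      this.users.set(user.id, user);
      return { status: 201, body: user };
    }
    if (method === "GET" && id !== undefined) {
      const user = this.users.get(id);
      return user ? { status: 200, body: user } : { status: 404 };
    }
    if (method === "DELETE" && id !== undefined) {
      return this.users.delete(id) ? { status: 204 } : { status: 404 };
    }
    return { status: 405 };
  }
}

// Atomic-level API test: one endpoint treated as a unit.
function atomicGetUnknownUserReturns404(api: FakeUserApi): boolean {
  return api.handle("GET", "/users/does-not-exist").status === 404;
}

// Scenario-level API test: a chain of requests examining a full flow.
function scenarioCreateReadDelete(api: FakeUserApi): boolean {
  const created = api.handle("POST", "/users", { name: "Ada" });
  if (created.status !== 201) return false;
  const id = (created.body as { id: string }).id;

  const fetched = api.handle("GET", `/users/${id}`);
  if (fetched.status !== 200 || (fetched.body as { name: string }).name !== "Ada") return false;

  if (api.handle("DELETE", `/users/${id}`).status !== 204) return false;
  // After deletion the same resource should be gone.
  return api.handle("GET", `/users/${id}`).status === 404;
}

const api = new FakeUserApi();
console.log(atomicGetUnknownUserReturns404(api)); // true
console.log(scenarioCreateReadDelete(api));       // true
```

In a real back-end pipeline, `handle` would be replaced by HTTP requests to a test instance of the service; the structure of the two tests would stay the same.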

Similarly, the front-end pipeline should contain all the automated tests needed to ensure that the front-end service being delivered will not break anything. It can assume the back-end service works fine, since it has already been tested and has passed all its tests in the back-end CI/CD pipeline. The question now is whether the front-end application correctly handles the back-end API endpoints and composes the front-end components. Therefore, the front-end pipeline will most likely contain some UI tests at the scenario level (a.k.a. E2E tests), some more UI tests at the atomic level (to exercise UI components or paths not covered by the full-scenario UI tests), and some more integration, component, contract, and unit tests at the code level.

Hopefully, that clarifies a thing or two.